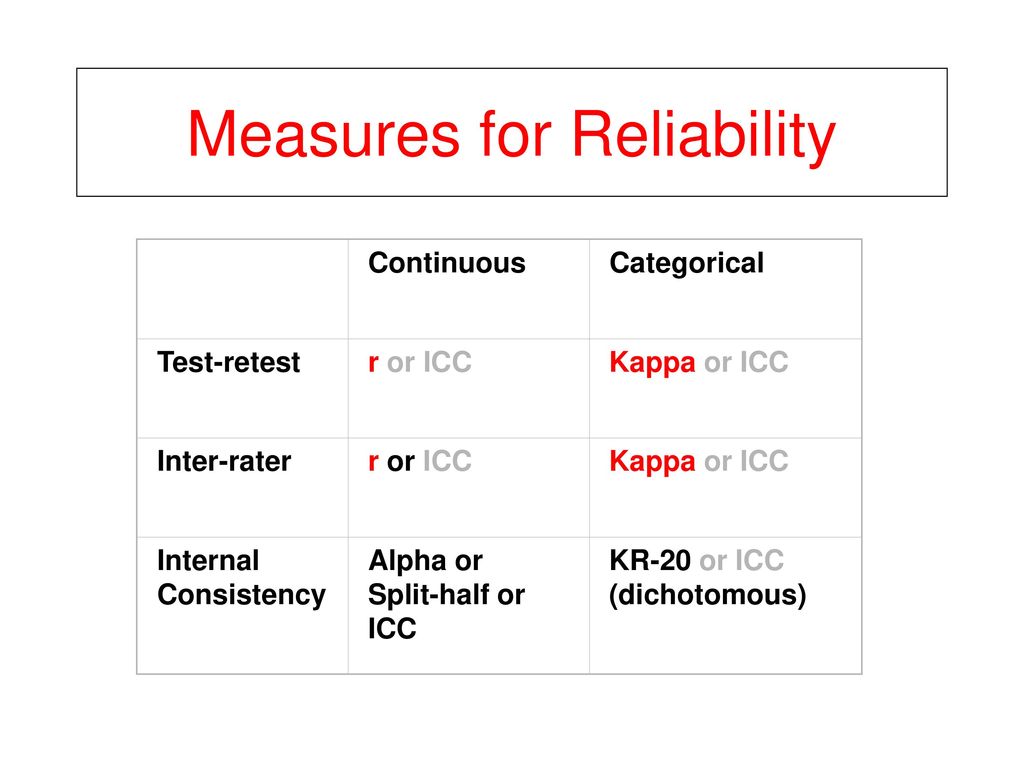

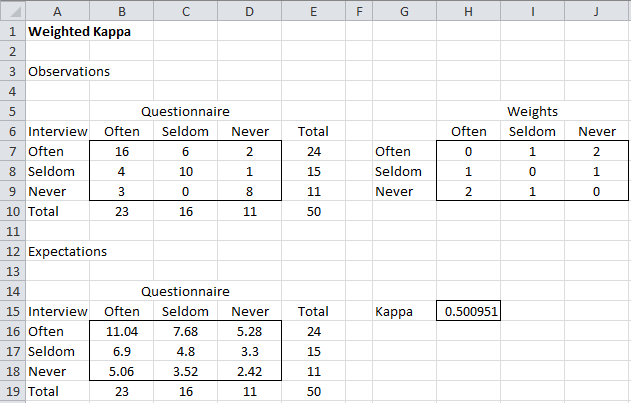

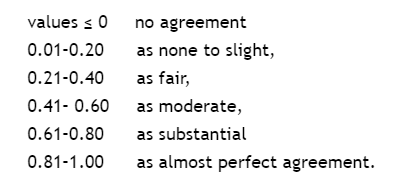

Using appropriate Kappa statistic in evaluating inter-rater reliability. Short communication on “Groundwater vulnerability and contamination risk mapping of semi-arid Totko river basin, India using GIS-based DRASTIC model and AHP techniques ...

Investigating the intra- and inter-rater reliability of a panel of subjective and objective burn scar measurement tools